SHREC 2020: Track on recognition of sequences of gestures from fingers' trajectories

General description

This track is dedicated to the problem of recognizing several gestures of different kinds in sequences of hand skeleton poses captured by a Leap Motion tracking device, simulating a realistic context of Virtual Reality or Mixed Reality interaction.

Given a set of examples of segmented and labelled gestures, participants should be able to process test sequences that each include a variable number of gestures (2-5), detect the gestures, and estimate the time stamps of their start and end.

Dataset

Data has been collected from 5 users recording both single gestures and sequences. The gesture dictionary includes three types of gestures: static, where the hand reaches a meaningful pose and remains in that pose for at least a second; coarse, where the meaning is given by the hand trajectory; and fine, where the recognition should also rely on finger movements. The gesture dictionary is the following:

- one (static)

- two (static)

- three (static)

- four (static)

- OK (static)

- pinch

- grab

- expand

- tap

- swipe left

- swipe right

- swipe V

- swipe O

The sensor used to capture the trajectories is a Leap Motion, and the sampling interval was set to 0.05 s (20 frames per second).

Each file in the dataset refers to a single run, and each row contains the data of the hand's joints captured in a single frame by the sensor.

All values in a row are separated by semicolons. Each line includes the position and rotation values for the hand palm and all the finger joints. The line index (starting from 1) corresponds to the timestamp of the corresponding frame.
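Since the line index acts as the frame timestamp, a row number can be converted to elapsed time using the 0.05 s interval stated above. The following is a minimal sketch; the function name `row_to_seconds` is hypothetical, and it assumes that the first row (index 1) corresponds to time zero, which the description does not state explicitly.

```python
FRAME_INTERVAL_S = 0.05  # sampling interval given in the dataset description


def row_to_seconds(row_index: int) -> float:
    """Convert a 1-based row index to elapsed seconds.

    Assumption: row 1 is taken as time zero.
    """
    if row_index < 1:
        raise ValueError("row indices start from 1")
    return (row_index - 1) * FRAME_INTERVAL_S
```

Under this assumption, a static gesture held for at least one second spans at least 20 consecutive rows.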

The structure of a row is summarized in the following scheme:

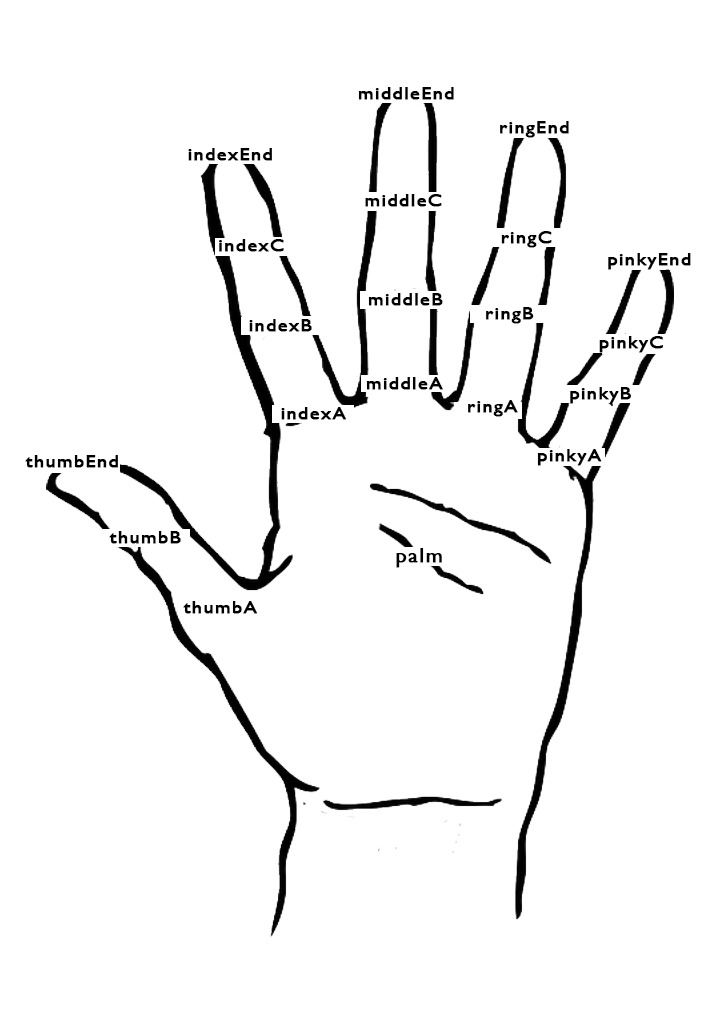

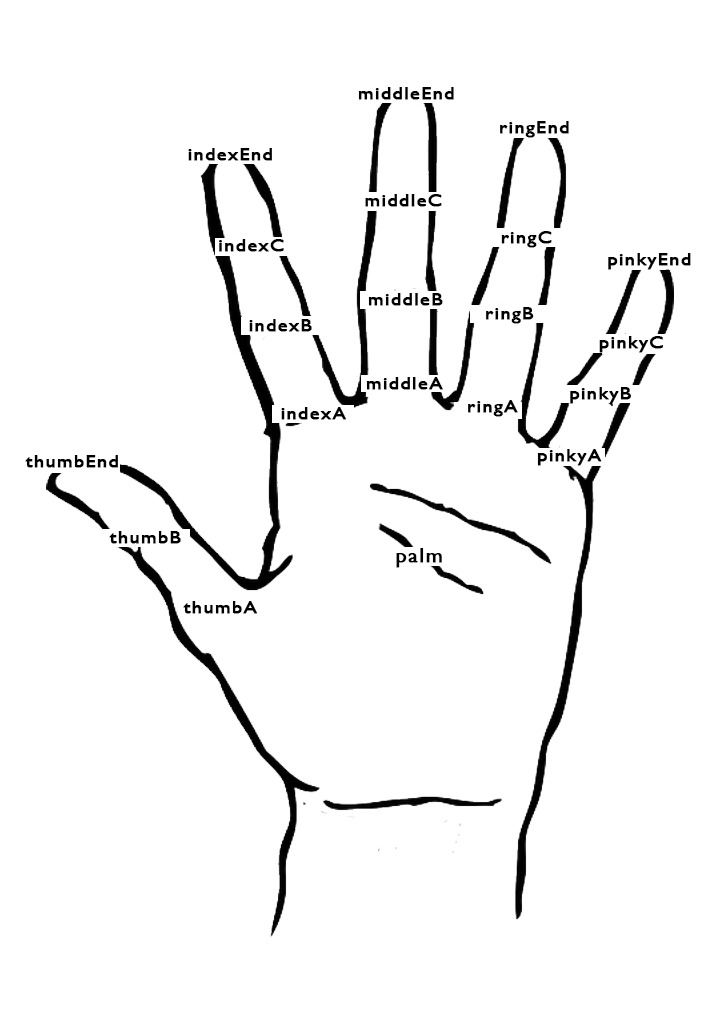

palmpos(x;y;z);palmquat(x;y;z;w);thumbApos(x;y;z);thumbAquat(x;y;z;w);thumbBpos(x;y;z);thumbBquat(x;y;z;w);thumbEndpos(x;y;z);thumbEndquat(x;y;z;w);indexApos(x;y;z);indexAquat(x;y;z;w);indexBpos(x;y;z);indexBquat(x;y;z;w);indexCpos(x;y;z);indexCquat(x;y;z;w);indexEndpos(x;y;z);indexEndquat(x;y;z;w);middleApos(x;y;z);middleAquat(x;y;z;w);middleBpos(x;y;z);middleBquat(x;y;z;w);middleCpos(x;y;z);middleCquat(x;y;z;w);middleEndpos(x;y;z);middleEndquat(x;y;z;w);ringApos(x;y;z);ringAquat(x;y;z;w);ringBpos(x;y;z);ringBquat(x;y;z;w);ringCpos(x;y;z);ringCquat(x;y;z;w);ringEndpos(x;y;z);ringEndquat(x;y;z;w);pinkyApos(x;y;z);pinkyAquat(x;y;z;w);pinkyBpos(x;y;z);pinkyBquat(x;y;z;w);pinkyCpos(x;y;z);pinkyCquat(x;y;z;w);pinkyEndpos(x;y;z);pinkyEndquat(x;y;z;w)

where the joint positions correspond to those reported in the following image.

Each joint is therefore characterized by 7 floats: three for the position and four for the quaternion. The sequence starts with the palm, continues with the thumb (three joints, ending with the tip), and then lists the other four fingers with four joints each, ending with the tip. A row following this scheme therefore holds 20 joints, i.e. 140 values.
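The row layout described above can be read with a few lines of Python. This is a minimal sketch, not an official loader: the names `JOINT_NAMES` and `parse_row` are hypothetical, and the code assumes that values use a period as decimal separator and that any empty field (for instance after a trailing semicolon) can be ignored.

```python
from typing import Dict, List, Tuple

# Joint order as listed in the row scheme: palm, three thumb joints,
# then four joints for each of the other four fingers (20 joints in total).
JOINT_NAMES: List[str] = ["palm", "thumbA", "thumbB", "thumbEnd"] + [
    f"{finger}{part}"
    for finger in ("index", "middle", "ring", "pinky")
    for part in ("A", "B", "C", "End")
]
FLOATS_PER_JOINT = 7  # 3 position values + 4 quaternion values

Joint = Tuple[Tuple[float, ...], Tuple[float, ...]]  # (position, quaternion)


def parse_row(line: str) -> Dict[str, Joint]:
    """Split one semicolon-separated row into (position, quaternion) per joint."""
    # Assumption: empty fields (e.g. from a trailing semicolon) are skipped.
    values = [float(v) for v in line.strip().split(";") if v.strip()]
    expected = len(JOINT_NAMES) * FLOATS_PER_JOINT
    if len(values) != expected:
        raise ValueError(f"expected {expected} values, got {len(values)}")
    joints: Dict[str, Joint] = {}
    for i, name in enumerate(JOINT_NAMES):
        chunk = values[i * FLOATS_PER_JOINT:(i + 1) * FLOATS_PER_JOINT]
        joints[name] = (tuple(chunk[:3]), tuple(chunk[3:]))
    return joints
```

Calling `parse_row` on each line of a file yields one dictionary per frame, with the list position of each frame (plus one) serving as its timestamp.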

Training and test data download

We provide a set of 36 example gestures for each of the 13 gesture classes. We include only the gesture sequences, as text files in the format described above, stored in folders named after the numeric gesture code (1 to 13). Here is the download link.

The test archive contains 72 test sequences, each including a variable number of gestures to be identified and located. Here is the download link.

Task and evaluation

Participants must provide methods to detect and classify gestures of the dictionary in the set of 72 test sequences.

Results must be provided as a text file NAME_SURNAME.txt including a row for each trajectory with the following information:

trajectory number; predicted gesture label; predicted gesture start time stamp (row index in the corresponding file); predicted gesture end time stamp; predicted gesture label; predicted gesture start time stamp; predicted gesture end time stamp; and so on for every detected gesture.

For example, if the algorithm detects a "pinch" and a "one" in the first sequence, and a "three", a "swipe left" and a "swipe O" in the second, the first two lines of the results file will look like the following:

1;6;12;54;1;82;138;

2;3;18;75;10;111;183;13;222;298;

...
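A results row in the layout of the two example lines above (sequence number, then label, start row and end row for each detected gesture, with a trailing semicolon) could be produced as in the sketch below. The names `GESTURE_CODES` and `format_result_row` are hypothetical. The numeric codes are assumed to follow the order of the gesture dictionary, which is consistent with the example (pinch = 6, one = 1, three = 3, swipe left = 10, swipe O = 13) but is not stated explicitly.

```python
from typing import List, Tuple

# Assumption: codes 1 to 13 follow the order of the gesture dictionary.
GESTURE_CODES = {
    name: code
    for code, name in enumerate(
        ["one", "two", "three", "four", "OK", "pinch", "grab", "expand",
         "tap", "swipe left", "swipe right", "swipe V", "swipe O"],
        start=1,
    )
}

Detection = Tuple[str, int, int]  # (gesture name, start row, end row)


def format_result_row(sequence_number: int, detections: List[Detection]) -> str:
    """Build one semicolon-separated line of the results file."""
    fields = [str(sequence_number)]
    for name, start, end in detections:
        if start > end:
            raise ValueError(f"start row {start} is after end row {end}")
        fields += [str(GESTURE_CODES[name]), str(start), str(end)]
    # The example rows end with a semicolon, so one is appended here too.
    return ";".join(fields) + ";"


print(format_result_row(1, [("pinch", 12, 54), ("one", 82, 138)]))
# prints 1;6;12;54;1;82;138;
```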

Executable code performing the classification should be provided with the submission, together with instructions for its use.

The evaluation will be based on the number of correct gestures detected and the false positive rate.
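The track description does not specify how a prediction is matched against the ground truth. Purely as an illustration, the sketch below assumes that a predicted gesture is correct when its label equals that of a ground-truth gesture whose row interval it overlaps, and that each ground-truth gesture can be matched only once. Both the matching rule and the function name `count_matches` are assumptions, not the organizers' evaluation code.

```python
from typing import List, Tuple

Gesture = Tuple[int, int, int]  # (label, start row, end row)


def count_matches(predicted: List[Gesture], truth: List[Gesture]) -> Tuple[int, int]:
    """Return (correct detections, false positives) under the assumed overlap rule."""
    unmatched = list(truth)  # ground-truth gestures not yet claimed
    correct = 0
    false_positives = 0
    for label, start, end in predicted:
        # First unmatched ground-truth gesture with the same label
        # whose interval overlaps the predicted interval.
        hit = next(
            (g for g in unmatched
             if g[0] == label and start <= g[2] and g[1] <= end),
            None,
        )
        if hit is None:
            false_positives += 1
        else:
            unmatched.remove(hit)
            correct += 1
    return correct, false_positives
```

For instance, with ground truth `[(6, 10, 50), (1, 80, 140)]` and predictions `[(6, 12, 54), (3, 60, 70), (1, 82, 138)]`, this rule yields two correct detections and one false positive.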

Timeline

- March 3: dataset available

- March 10: registration deadline; participants should send an e-mail to register for the contest to andrea.giachetti(at)univr.it

- April 1: Confirmation of participation

- April 15: results sent by participants and collected together with executable code and method descriptions (one-page LaTeX file with at most two figures)

- May 3: submission of the track report to the reviewers

Contacts

Ariel Caputo (email: fabiomarco.caputo(at)univr.it ) - VIPS Lab, University of Verona

Marco Pegoraro (email: marco.pegoraro_01(at)studenti.univr.it ) - VIPS Lab, University of Verona

Andrea Giachetti (email: andrea.giachetti(at)univr.it ) - VIPS Lab, University of Verona