SHREC 2019: Track on online detection of simple gestures from hand trajectories

General description

This track is dedicated to the problem of locating a simple gesture in a longer 3D trajectory recorded in a realistic context, where users acted on a virtual 3D interface in an immersive Virtual Reality environment. Given a set of example trajectories annotated with the temporal location of the gesture, participants should use an online recognizer to detect the gesture label and the temporal location of the gesture end in a set of test trajectories.

Dataset creation

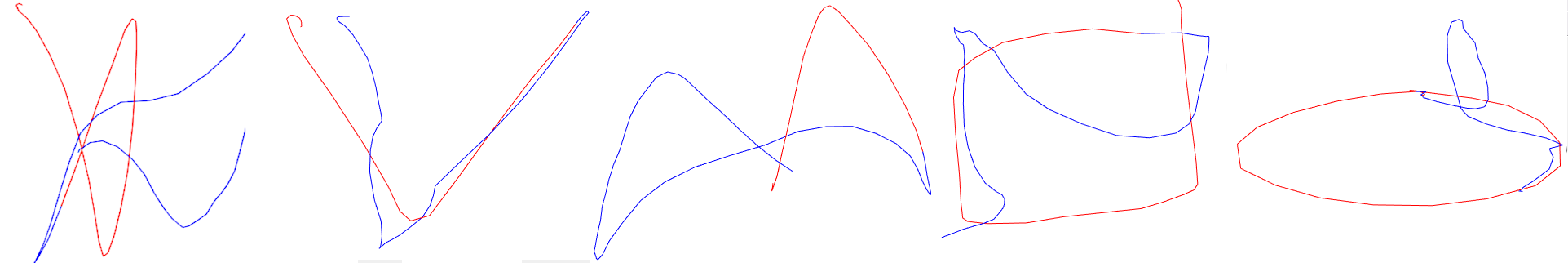

Data has been collected from 13 users performing an interaction task with their hands on a virtual UI. During each run the user had to perform exactly one of the following gestures with their index fingertip:

- Cross (X)

- Circle (O)

- V-mark (V)

- Caret (^)

- Square ([])

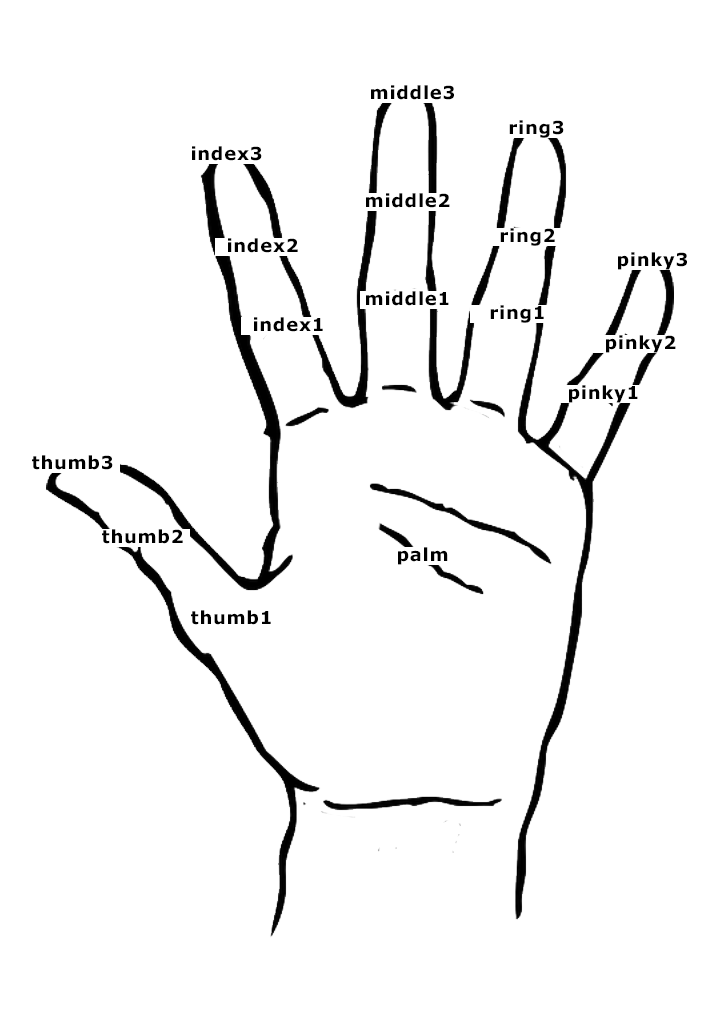

The structure of a row is summarized in the following scheme:

frame_ID;timestamp;palmpos(x;y;z);palmquat(x;y;z;w);thumb1pos(x;y;z);thumb1quat(x;y;z;w);thumb2pos(x;y;z);thumb2quat(x;y;z;w);thumb3pos(x;y;z);thumb3quat(x;y;z;w);index1pos(x;y;z);index1quat(x;y;z;w);index2pos(x;y;z);index2quat(x;y;z;w);index3pos(x;y;z);index3quat(x;y;z;w);middle1pos(x;y;z);middle1quat(x;y;z;w);middle2pos(x;y;z);middle2quat(x;y;z;w);middle3pos(x;y;z);middle3quat(x;y;z;w);ring1pos(x;y;z);ring1quat(x;y;z;w);ring2pos(x;y;z);ring2quat(x;y;z;w);ring3pos(x;y;z);ring3quat(x;y;z;w);pinky1pos(x;y;z);pinky1quat(x;y;z;w);pinky2pos(x;y;z);pinky2quat(x;y;z;w);pinky3pos(x;y;z);pinky3quat(x;y;z;w)

where the joint positions correspond to those reported in the following image.

As the gestures are just hand trajectories, the palm or index tip trajectory should be sufficient to identify them.
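The row scheme above can be read with a few lines of Python. This is a minimal sketch, not an official loader: it assumes that every value in a data row is separated by a semicolon, that each of the 16 joints contributes 3 position values followed by 4 quaternion values (114 fields in total), and that a trailing semicolon may be present. The names `JOINTS` and `parse_row` are hypothetical. The timestamp is kept as text because its format is not specified here.

```python
# Joint order as listed in the row scheme: palm, then three joints per finger.
JOINTS = ["palm"] + [
    f"{finger}{i}"
    for finger in ("thumb", "index", "middle", "ring", "pinky")
    for i in (1, 2, 3)
]
VALUES_PER_JOINT = 7  # position (x, y, z) followed by quaternion (x, y, z, w)
EXPECTED_FIELDS = 2 + len(JOINTS) * VALUES_PER_JOINT  # frame_ID + timestamp + joints


def parse_row(line):
    """Split one data row into (frame_id, timestamp, joints).

    `joints` maps a joint name to {"pos": (x, y, z), "quat": (x, y, z, w)}.
    """
    fields = line.strip().split(";")
    if fields and fields[-1] == "":
        fields = fields[:-1]  # tolerate a trailing semicolon (assumption)
    if len(fields) != EXPECTED_FIELDS:
        raise ValueError(f"expected {EXPECTED_FIELDS} fields, got {len(fields)}")

    frame_id = int(fields[0])
    timestamp = fields[1]  # kept as text: the timestamp format is not specified
    joints = {}
    for k, name in enumerate(JOINTS):
        start = 2 + k * VALUES_PER_JOINT
        values = [float(v) for v in fields[start:start + VALUES_PER_JOINT]]
        joints[name] = {"pos": tuple(values[:3]), "quat": tuple(values[3:])}
    return frame_id, timestamp, joints
```

A palm trajectory is then the sequence of `joints["palm"]["pos"]` over the rows of a run. Which of the three index joints is the fingertip should be checked against the joint image before choosing one of them.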

Annotated data used for training will contain 2 special rows, both marked by the frame_ID value set to -1 and with empty values in the remaining fields. The first marks the beginning of the gesture, while the second marks its end. The first special row also ends with a special character (one of X, O, ^, V, []) to indicate which of the 5 gestures has been performed in that run.
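The annotation rows can be separated from the data rows as follows. This is a sketch under stated assumptions: marker rows start with the literal text `-1`, the gesture label is the last non-empty field of the first marker row, and the gesture interval is expressed as inclusive indices into the list of data rows (the special rows themselves are not counted). The function name `split_annotated_run` is hypothetical.

```python
GESTURES = {"X", "O", "^", "V", "[]"}


def split_annotated_run(lines):
    """Return (data_rows, label, begin, end) for one annotated training run.

    `begin` and `end` are inclusive indices into `data_rows`.
    """
    data_rows = []
    markers = []  # one (index of next data row, label or None) per special row
    for raw in lines:
        line = raw.strip()
        if not line:
            continue
        fields = line.split(";")
        if fields[0].strip() == "-1":
            non_empty = [f.strip() for f in fields[1:] if f.strip()]
            label = non_empty[-1] if non_empty else None
            markers.append((len(data_rows), label))
        else:
            data_rows.append(line)

    if len(markers) != 2:
        raise ValueError(f"expected 2 special rows, found {len(markers)}")
    (begin, label), (end_exclusive, _) = markers
    if label not in GESTURES:
        raise ValueError(f"unexpected gesture label: {label!r}")
    return data_rows, label, begin, end_exclusive - 1
```

Typical use would be `split_annotated_run(open(path))` for each training file, where `path` is whatever name the downloaded files have.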

Training data

A training set with annotated gestures can be downloaded now from this link.

Task and evaluation

We ask participants to estimate, with an online method trained on the annotated training data, the correct gesture ID for each trajectory in a set of unseen, non-annotated test data. To simulate online recognition, the proposed methods should respect the following constraints:

- the method should try to correctly classify the gesture contained in the trajectory and also estimate 2 values (t1,t2) representing the guessed beginning and end frame of the gesture.

- the method should process the trajectory data row by row (therefore simulating temporal sequentiality of online runs) and guess the information stated in the previous point without processing beyond t2.
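The two constraints above can be illustrated with a small driver loop. This is only a sketch of one way to respect them, not a prescribed interface: the `Detection` record, the `run_online` function and the `step` callback are hypothetical names, and the placeholder `toy_step` stands in for a real recognizer. The driver hands rows to the method one at a time, stops reading as soon as a result is produced, and rejects a result whose t2 lies before the current row, since that would mean rows beyond t2 were processed.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str  # guessed gesture: one of X, O, ^, V, []
    t1: int     # guessed index of the first gesture row
    t2: int     # guessed index of the last gesture row


def run_online(rows, step):
    """Feed rows one by one to `step(index, row)` until it returns a Detection.

    `step` returns None while the method has not decided yet.
    """
    for i, row in enumerate(rows):
        detection = step(i, row)
        if detection is not None:
            if detection.t2 < i:
                raise ValueError("rows beyond t2 were processed before answering")
            return detection  # no further rows are read
    return None  # the method never produced an answer


def toy_step(i, row):
    """Placeholder recognizer: always answers 'O' at row 3 (illustration only)."""
    return Detection("O", 1, 3) if i == 3 else None
```

Passing a generator (for example an open file) as `rows` guarantees that the rows after the detection are never even read.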

The evaluation will be based on the classification accuracy and on the correct localization of the gesture in the time sequence.
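The exact localization measure is not specified here. For self-assessment on the training set, one possible choice is to combine plain classification accuracy with the overlap between the predicted and annotated intervals. The sketch below is an assumption and not the official evaluation protocol: `accuracy` and `interval_iou` are hypothetical helpers, and intervals are taken as inclusive frame indices.

```python
def accuracy(predicted_labels, true_labels):
    """Fraction of runs whose gesture label was guessed correctly."""
    if len(predicted_labels) != len(true_labels) or not true_labels:
        raise ValueError("label lists must be non-empty and of equal length")
    hits = sum(p == t for p, t in zip(predicted_labels, true_labels))
    return hits / len(true_labels)


def interval_iou(predicted, annotated):
    """Intersection over union of two inclusive frame intervals (t1, t2)."""
    (p1, p2), (a1, a2) = predicted, annotated
    if p2 < p1 or a2 < a1:
        raise ValueError("each interval needs t1 <= t2")
    intersection = max(0, min(p2, a2) - max(p1, a1) + 1)
    union = (p2 - p1 + 1) + (a2 - a1 + 1) - intersection
    return intersection / union
```

An overlap of 1.0 means that the guessed (t1,t2) matches the annotation exactly, while 0.0 means that the two intervals share no frame.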

Timeline

- February 10: dataset available

- February 10: participants send an e-mail to register for the contest

- March 5: results sent by participants and collected together with methods descriptions

- March 15: submission of the track report to the reviewers